This paper presents a comparative analysis of three widely used parallel sorting algorithms, OddEven sort, Rank sort and Bitonic sort. They are compared in terms of sorting rate, sorting time and speed-up on a CPU and on different GPU architectures. Alongside them, we have implemented a novel parallel algorithm, the min-max butterfly network, for finding the minimum and maximum in large data sets. To achieve high performance, all algorithms exploit the data-parallel model available on multi-core GPUs through the OpenCL specification. Our results show a minimum speed-up of 19x for bitonic sort over the odd-even sorting technique for small queue sizes on the CPU. The maximum speed-up is 2300x for very large queue sizes on the Nvidia Quadro 6000 GPU architecture.

In this paper, we discuss efficient parallel sorting procedures (quick sort and radix sort) on GPGPU computing and compare the GPU results with CPU computing. We parallelized both sorting techniques on the GPU using Compute Unified Device Architecture (CUDA), the parallel programming platform developed by NVIDIA Corporation. The main result of MMDBM is used to compare the classifier's CPU computing results with its GPU computing results. The GPU sorting algorithms provide fast, exact results with less processing time and offer sufficient support for real-time applications.
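The following C++ sketch may help show why the gap between bitonic and odd-even sorting grows with queue size. It runs each algorithm as a sequence of data-parallel passes. Each pass is a set of independent compare-exchange operations that OpenCL would map to one work-item each; here they run sequentially. The sketch rests on a few assumptions:

- "OddEven sort" is taken to mean odd-even transposition sort.
- Bitonic and butterfly inputs are assumed to be powers of two in length.
- `butterfly_min_max` is a hypothetical XOR-partner reduction in the spirit of a min-max butterfly network, not the paper's implementation.

All function names are illustrative.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// Each sort returns its number of "passes": rounds of independent
// compare-exchange operations that a GPU would run as one kernel launch
// or one barrier-separated step.

// Odd-even transposition sort (assumed reading of "OddEven sort"):
// n passes, alternately comparing pairs (0,1),(2,3),... and (1,2),(3,4),...
std::size_t odd_even_sort(std::vector<int>& a) {
    const std::size_t n = a.size();
    std::size_t passes = 0;
    for (std::size_t phase = 0; phase < n; ++phase, ++passes) {
        for (std::size_t i = phase % 2; i + 1 < n; i += 2) {  // independent pairs
            if (a[i] > a[i + 1]) std::swap(a[i], a[i + 1]);
        }
    }
    return passes;
}

// Bitonic sort: log2(n) * (log2(n) + 1) / 2 passes for a power-of-two n.
std::size_t bitonic_sort(std::vector<int>& a) {
    const std::size_t n = a.size();
    assert(n > 0 && (n & (n - 1)) == 0);  // power-of-two length assumed
    std::size_t passes = 0;
    for (std::size_t k = 2; k <= n; k <<= 1) {                    // merge size
        for (std::size_t j = k >> 1; j > 0; j >>= 1, ++passes) {  // compare distance
            for (std::size_t i = 0; i < n; ++i) {                 // one work-item per element
                const std::size_t partner = i ^ j;
                if (partner > i) {
                    const bool ascending = (i & k) == 0;
                    if ((a[i] > a[partner]) == ascending) std::swap(a[i], a[partner]);
                }
            }
        }
    }
    return passes;
}

struct MinMax { int lo, hi; };

// Hypothetical butterfly min/max reduction: in each pass every lane i combines
// with lane i ^ d, so after log2(n) passes every lane holds the global min and max.
MinMax butterfly_min_max(const std::vector<int>& a) {
    const std::size_t n = a.size();
    assert(n > 0 && (n & (n - 1)) == 0);  // power-of-two length assumed
    std::vector<MinMax> cur(n), next(n);
    for (std::size_t i = 0; i < n; ++i) cur[i] = {a[i], a[i]};
    for (std::size_t d = 1; d < n; d <<= 1) {
        for (std::size_t i = 0; i < n; ++i) {  // reads only the previous pass's values
            const MinMax& p = cur[i ^ d];
            next[i] = {std::min(cur[i].lo, p.lo), std::max(cur[i].hi, p.hi)};
        }
        std::swap(cur, next);
    }
    return cur[0];
}
```

Sorting 1024 values takes 55 bitonic passes versus 1024 odd-even transposition passes. Pass count alone does not account for the reported speed-ups, which also depend on memory traffic and on the hardware. It does suggest why bitonic sort's advantage widens as the queue grows.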

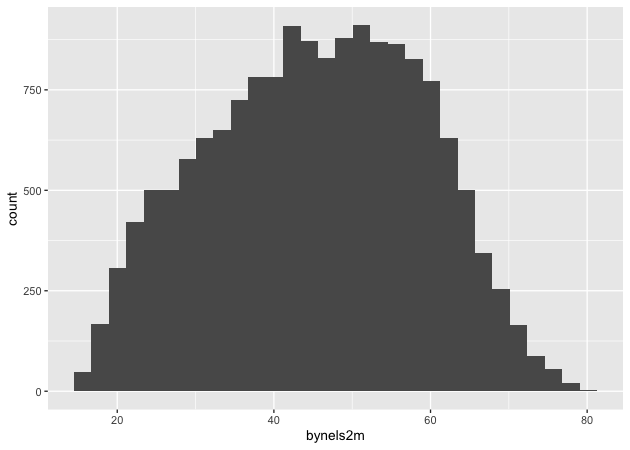

HISTOGRAM DIM3 GRIDDIM CODE

A decision tree classifier called Mixed Mode Database Miner (MMDBM) is used to classify large datasets with a large number of attributes. It is implemented with different sorting techniques (quick sort and radix sort) in both Central Processing Unit (CPU) computing and General-Purpose computing on Graphics Processing Units (GPGPU), and the results are discussed. This classifier is suitable for handling a large number of both numerical and categorical attributes. The MMDBM classifier has been implemented on CUDA GPUs and the code is provided.

What matters is “active” threads… Active threads are the threads that a multiprocessor executes simultaneously at any given point in time. One needs to work out the “CUDA occupancy” of the kernel and make sure that the following bottom line is met: at run time, each multiprocessor should have at least 192 ACTIVE threads (256 is best) to hide latencies. This does NOT impose any condition on your block size; people have reported the best performance even with 64 threads per block. One just needs to work from this bottom line to adjust the block and grid size for optimal performance on any given hardware. The hardware factor does have a role to play (read on…).

Also note that your statement allows 128 threads per block and as many blocks as there are multiprocessors. In this configuration each multiprocessor holds only 128 active threads, fewer than the 192 needed. You will therefore suffer from register latencies and probably also from global memory latencies.
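The occupancy bottom line can be checked with simple arithmetic. The sketch below is a simplified model, not NVIDIA's occupancy calculator. It takes the number of resident blocks per multiprocessor as the smallest limit imposed by threads, registers, shared memory and the per-multiprocessor block cap. It ignores allocation granularity.

The `SmLimits` defaults are assumed example values typical of compute capability 1.3 devices:
- 1024 threads per multiprocessor
- 8 blocks per multiprocessor
- 16,384 registers per multiprocessor
- 16 KB of shared memory per multiprocessor

Substitute your own device's figures. The struct and function names are hypothetical.

```cpp
#include <algorithm>
#include <cassert>

// Assumed per-multiprocessor limits (typical compute capability 1.3 values).
struct SmLimits {
    int max_threads = 1024;
    int max_blocks = 8;
    int registers = 16384;
    int shared_bytes = 16384;
};

struct KernelConfig {
    int threads_per_block;
    int registers_per_thread;
    int shared_bytes_per_block;
};

// Simplified occupancy model: resident blocks = the tightest of the limits.
// Ignores register/shared-memory allocation granularity and warp rounding.
int resident_blocks(const KernelConfig& k, const SmLimits& sm) {
    assert(k.threads_per_block > 0);
    const int by_threads = sm.max_threads / k.threads_per_block;
    const int by_regs = k.registers_per_thread > 0
        ? sm.registers / (k.registers_per_thread * k.threads_per_block)
        : sm.max_blocks;
    const int by_smem = k.shared_bytes_per_block > 0
        ? sm.shared_bytes / k.shared_bytes_per_block
        : sm.max_blocks;
    return std::min({sm.max_blocks, by_threads, by_regs, by_smem});
}

// Threads resident (active) on one multiprocessor at the same time.
int active_threads(const KernelConfig& k, const SmLimits& sm) {
    return resident_blocks(k, sm) * k.threads_per_block;
}

// The bottom line from the text: at least 192 active threads per multiprocessor.
bool hides_latency(const KernelConfig& k, const SmLimits& sm, int target = 192) {
    return active_threads(k, sm) >= target;
}
```

With those assumed limits, consider two configurations:
- 128-thread blocks using 32 registers per thread and 4 KB of shared memory give 4 resident blocks, that is, 512 active threads.
- 128 registers per thread drop a 128-thread kernel to a single resident block of 128 active threads, below the 192-thread target.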

Grid size and block size are chosen by the programmer, and good values are hardware dependent, i.e. they depend on the device used. It all depends on how the parallel programmer chooses to write the kernel and how the data is decomposed among blocks. One suggestion is to make gridDim the total number of multiprocessors in the device and blockDim 128, 256 or 512; 256 works fine on any type of device.

What I am trying to make him understand is: efficiency does not come from using blocks of maximal size. Usually a larger number of blocks (10,000 or 20,000) gives higher performance, provided the blocks have something meaningful and sizeable to do. If you use small blocks, the code will run just fine on a larger device, which simply runs several blocks per multiprocessor. There is no reason for the runtime to automate this.

For certain algorithms, however, the more shared memory and the more registers you have per block, the better (matrix multiplication is a good example). For your type of algorithm, I don't think a maximally large block or a hundred registers per thread has any advantage. A good configuration is blocks of 128 threads, 32 registers per thread, and up to 4 KB of shared memory per block. This will let your code scale nicely across all devices, including future ones, and is a good balance of occupancy and resource usage on current ones. Also, global memory (gmem) accesses may be slightly faster if there is one block per multiprocessor and the accesses are perfectly optimized.
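A grid-stride loop is a common way to make a kernel correct for any grid size. That covers both a gridDim equal to the number of multiprocessors and a grid of thousands of blocks. The C++ sketch below simulates one sequentially:
- `blocks_for` computes the one-thread-per-element grid by ceiling division.
- `scale_grid_stride` mirrors a kernel whose index starts at `blockIdx.x * blockDim.x + threadIdx.x` and advances by `gridDim.x * blockDim.x`.

The function names and the scaling operation are hypothetical examples.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Number of blocks needed so that one thread covers one element.
std::size_t blocks_for(std::size_t n, std::size_t block_dim) {
    assert(block_dim > 0);
    return (n + block_dim - 1) / block_dim;  // ceiling division
}

// Sequential stand-in for a grid-stride kernel. On a GPU every (block, thread)
// pair runs concurrently; each one starts at its global index and advances by
// the total number of threads in the grid, so every element is touched once.
void scale_grid_stride(std::vector<float>& data, float factor,
                       std::size_t grid_dim, std::size_t block_dim) {
    const std::size_t stride = grid_dim * block_dim;  // gridDim.x * blockDim.x
    for (std::size_t block = 0; block < grid_dim; ++block) {
        for (std::size_t thread = 0; thread < block_dim; ++thread) {
            // blockIdx.x * blockDim.x + threadIdx.x
            for (std::size_t i = block * block_dim + thread; i < data.size(); i += stride) {
                data[i] *= factor;
            }
        }
    }
}
```

For 1,000,000 elements and 256-thread blocks, `blocks_for` gives 3907 blocks. Launching only a handful of blocks instead still touches every element; each thread simply performs more iterations.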

You can still use a blockDim.x of 64 with a bigger gridDim.x, provided your CUDA occupancy allows a minimum of 3 active blocks per multiprocessor (3 × 64 = 192). You need at least that many threads to cover your hardware latencies: a minimum of 192 threads (better, 256) must be active per multiprocessor. Multiply this by the number of multiprocessors in the hardware to get the minimum for the whole device.
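The arithmetic in this paragraph can be written out directly. In the sketch below, the 192-thread target comes from the text. The function names and the 30-multiprocessor device used in the example are assumptions for illustration.

```cpp
#include <cassert>

// Minimum resident blocks per multiprocessor needed to reach the
// active-thread target (192 in the text, 256 preferred).
int min_blocks_per_sm(int threads_per_block, int target_active = 192) {
    assert(threads_per_block > 0);
    return (target_active + threads_per_block - 1) / threads_per_block;  // ceiling
}

// Device-wide minimum: that many blocks resident on every multiprocessor at once.
int min_grid_blocks(int threads_per_block, int num_sms, int target_active = 192) {
    return min_blocks_per_sm(threads_per_block, target_active) * num_sms;
}
```

For 64-thread blocks, `min_blocks_per_sm(64)` is 3 (3 × 64 = 192). A hypothetical 30-multiprocessor device therefore needs at least `min_grid_blocks(64, 30)` = 90 blocks resident at once. The 256-thread target raises the per-multiprocessor figure to 4.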
